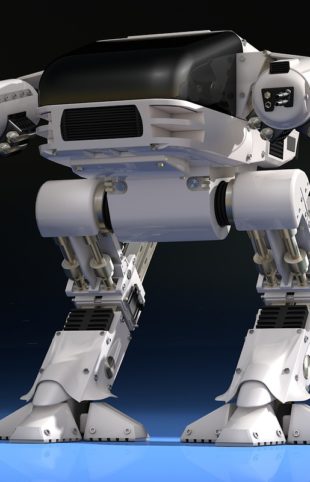

Autonomous weapons are weapons that can operate on their own and make life-and-death decisions on the battlefield, including “selecting and engaging targets without meaningful human control.” They are also known as autonomous robots or “killer robots” and include devices such as small drones (like quadcopters), large drones (like Predator drones), unmanned vehicles, four-legged robots (ranging from dog-sized to horse-sized), and humanoid robotic soldiers. As the technology to enable truly autonomous weapons has emerged in recent years, many have called for a ban on this technology or for severe limitations on it.

The debate surrounding autonomous weapons centers on several sub-questions: Do autonomous weapons make the world less or more safe? Can they be exploited by terrorists or rogue states? How do these robots respond in unclear situations? Can a ban be effectively enforced when this technology may become ubiquitous, as in the case of small quadcopter drones? Does it matter that robots can take risks that humans cannot, for example by waiting to fire until fired upon? Is their lack of emotions a liability or an asset on the battlefield? Will autonomous weapons lead to more casual decisions about going to war and using military force? Absent a ban, will there be an arms race in autonomous weapons? If we adopt a wait-and-see approach, will we have waited too long? Can robots act with the same moral agency as humans, and is this important? Does human dignity depend on reserving life-and-death decisions for humans? Should there be some element of meaningful human control over autonomous weapons, given the moral questions involved? Do human soldiers really have such a good human rights record in war zones, and should that be factored in?

“It will only be a matter of time until they appear on the black market and in the hands of terrorists, dictators wishing to better control their populace, warlords wishing to perpetrate ethnic cleansing, etc. Autonomous weapons are ideal for tasks such as assassinations, destabilizing nations, subduing populations and selectively killing a particular ethnic group. We therefore believe that a military AI arms race would not be beneficial for humanity. There are many ways in which AI can make battlefields safer for humans, especially civilians, without creating new tools for killing people.”

Toby Walsh, professor of artificial intelligence at the University of New South Wales, Australia: “These will be weapons of mass destruction. One programmer and a 3D printer can do what previously took an army of people. They will industrialize war, changing the speed and duration of how we can fight. They will be able to kill 24-7 and they will kill faster than humans can act to defend themselves.”

“We’re not going to be able to prevent autonomous armed robots from existing. The real question that we should be asking is this: Could autonomous armed robots perform better than armed humans in combat, resulting in fewer casualties on both sides?”

“[N]o letter, UN declaration, or even a formal ban ratified by multiple nations is going to prevent people from being able to build autonomous, weaponized robots. The barriers keeping people from developing this kind of system are just too low. Consider the ‘armed quadcopters.’ Today you can buy a smartphone-controlled quadrotor for US $300 at Toys R Us. Just imagine what you’ll be able to buy tomorrow. This technology exists. It’s improving all the time. There’s simply too much commercial value in creating quadcopters (and other robots) that have longer endurance, more autonomy, bigger payloads, and everything else that you’d also want in a military system.”

“[T]he most significant assumption that this letter makes is that armed autonomous robots are inherently more likely to cause unintended destruction and death than armed autonomous humans are. This may or may not be the case right now, and either way, I genuinely believe that it won’t be the case in the future, perhaps the very near future. I think that it will be possible for robots to be as good (or better) at identifying hostile enemy combatants as humans, since there are rules that can be followed (called Rules of Engagement, for an example see page 27 of this) to determine whether or not using force is justified. For example, does your target have a weapon? Is that weapon pointed at you? Has the weapon been fired? Have you been hit? These are all things that a robot can determine using any number of sensors that currently exist.”

“It’s worth noting that Rules of Engagement generally allow for engagement in the event of an imminent attack. In other words, if a hostile target has a weapon and that weapon is pointed at you, you can engage before the weapon is fired rather than after in the interests of self-protection. Robots could be even more cautious than this: you could program them to not engage a hostile target with deadly force unless they confirm with whatever level of certainty that you want that the target is actively engaging them already. Since robots aren’t alive and don’t have emotions and don’t get tired or stressed or distracted, it’s possible for them to just sit there, under fire, until all necessary criteria for engagement are met. Humans can’t do this.”

“Unmanned robotic systems can be designed without emotions that cloud their judgment or result in anger and frustration with ongoing battlefield events.”

“The ability to act conservatively: i.e., they do not need to protect themselves in cases of low certainty of target identification. Autonomous armed robotic vehicles do not need to have self-preservation as a foremost drive, if at all. They can be used in a self sacrificing manner if needed and appropriate without reservation by a commanding officer. There is no need for a ‘shoot first, ask-questions later’ approach, but rather a ‘first-do-no-harm’ strategy can be utilized instead. They can truly assume risk on behalf of the noncombatant, something that soldiers are schooled in, but which some have difficulty achieving in practice.”

“Intelligent electronic systems can integrate more information from more sources far faster before responding with lethal force than a human possibly could in real-time.”

“When working in a team of combined human soldiers and autonomous systems as an organic asset, they have the potential capability of independently and objectively monitoring ethical behavior in the battlefield by all parties, providing evidence and reporting infractions that might be observed. This presence alone might possibly lead to a reduction in human ethical infractions.”

Autonomous weapons systems will further lower the threshold for initiating war, thus making it a less politically costly and more likely option than ever before.

“I do agree that there is a potential risk with autonomous weapons of making it easier to decide to use force. But, that’s been true ever since someone realized that they could throw a rock at someone else instead of walking up and punching them. There’s been continual development of technologies that allow us to engage our enemies while minimizing our own risk, and what with the ballistic and cruise missiles that we’ve had for the last half century, we’ve got that pretty well figured out. If you want to argue that autonomous drones or armed ground robots will lower the bar even farther, then okay, but it’s a pretty low bar as is. And fundamentally, you’re then placing the blame on technology, not the people deciding how to use the technology.”

“The key question for humanity today is whether to start a global AI arms race or to prevent it from starting. If any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable, and the endpoint of this technological trajectory is obvious: autonomous weapons will become the Kalashnikovs of tomorrow. Unlike nuclear weapons, they require no costly or hard-to-obtain raw materials, so they will become ubiquitous and cheap for all significant military powers to mass-produce. It will only be a matter of time until they appear on the black market and in the hands of terrorists, dictators wishing to better control their populace, warlords wishing to perpetrate ethnic cleansing, etc.”

“The case for banning entire classes of weapons rests on moral grounds; practicality has nothing to do with it. To suggest that because a technology already exists, as has been the case with every class of weapon banned today—or because there are low barriers to its development, which is a growing problem with biological weapons—is sufficient reason to oppose a ban entirely neglects the moral foundations upon which bans are built, and opens the door for a line of reasoning that precludes any weapon ban at all. Weapons are not banned because they can be, but because there is something so morally abhorrent about them that nothing can justify their use. This brings us to one of the most fundamental points driving the campaign to ban lethal autonomous weapons systems: “Allowing life or death decisions to be made by machines crosses a fundamental moral line.” As recent events in Syria tragically demonstrate, weapons bans are not and never will be watertight, but it is not these practicalities that drive the ban. The prohibition on chemical weapons underscores the overwhelming belief that their use will never be acceptable, and the same reasoning applies to a ban on lethal autonomous weapons. Enforcing a ban on autonomous weapons systems might be difficult, but these difficulties are entirely divorced from the moral impetus that drives a ban. Similarly, discussing the potential difficulties of enacting a ban, or the current state of the technology, should be separate from discussing whether or not lethal autonomous weapons systems should join the list of prohibited weapons.”

“The group’s warning that autonomous machines ‘can be weapons of terror’ makes sense. But trying to ban them outright is probably a waste of time. That’s not because it’s impossible to ban weapons technologies. Some 192 nations have signed the Chemical Weapons Convention that bans chemical weapons, for example. An international agreement blocking use of laser weapons intended to cause permanent blindness is holding up nicely. Weapons systems that make their own decisions are a very different, and much broader, category. The line between weapons controlled by humans and those that fire autonomously is blurry, and many nations—including the US—have begun the process of crossing it. Moreover, technologies such as robotic aircraft and ground vehicles have proved so useful that armed forces may find giving them more independence—including to kill—irresistible.”

In an article for The Conversation, Jai Galliott wrote: “We already have weapons of the kind for which a ban is sought. UN bans are also virtually useless . . . ‘bad guys’ don’t play by the rules.”
